Soroban Rust Backend Compiler Plugin Deep Dive: Translating Branching Logic

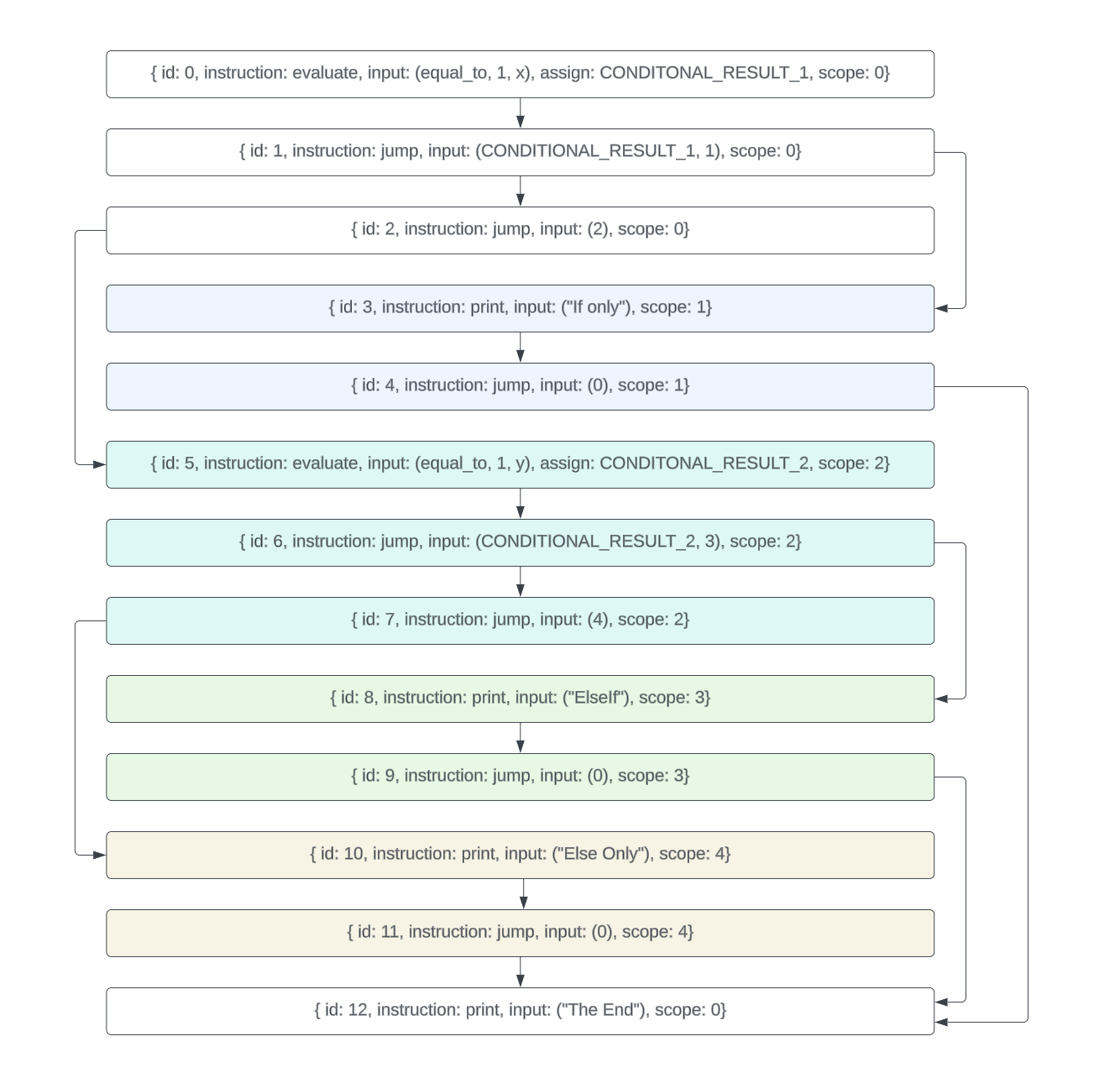

DTR, or Digicus Textual Representation, defines method logic via a set of sequentially executed transactions. DTR instructions have a scope field to represent blocks of contiguously executed code. By jumping between scopes, we can emulate branching.

Consider the following DTR:

{ id: 0, instruction: evaluate, input: (equal_to, 1, x), assign: CONDITONAL_RESULT_1, scope: 0}

{ id: 1, instruction: jump, input: (CONDITIONAL_RESULT_1, 1), scope: 0}

{ id: 2, instruction: jump, input: (2), scope: 0}

{ id: 3, instruction: print, input: ("If only"), scope: 1}

{ id: 4, instruction: jump, input: (0), scope: 1}

{ id: 5, instruction: evaluate, input: (equal_to, 1, y), assign: CONDITONAL_RESULT_2, scope: 2}

{ id: 6, instruction: jump, input: (CONDITIONAL_RESULT_2, 3), scope: 2}

{ id: 7, instruction: jump, input: (4), scope: 2}

{ id: 8, instruction: print, input: ("ElseIf"), scope: 3}

{ id: 9, instruction: jump, input: (0), scope: 3}

{ id: 10, instruction: print, input: ("Else Only"), scope: 4}

{ id: 11, instruction: jump, input: (0), scope: 4}

{ id: 12, instruction: print, input: ("The End"), scope: 0}

Graphically, the flow works like this:

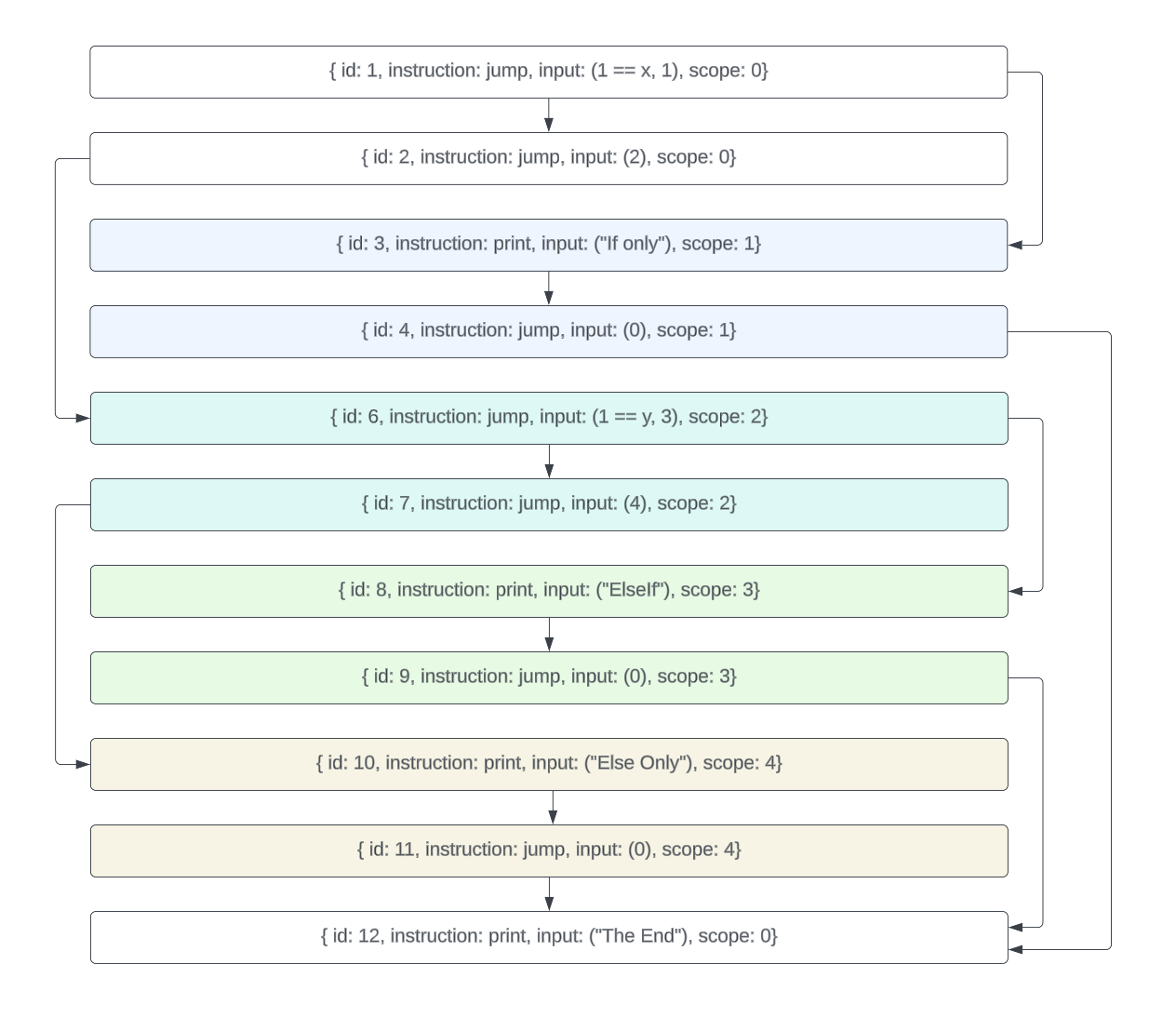

From here, we can condense down the conditional evaluations to get to a slightly easier to consume graphic. Notice that we retain the ids.

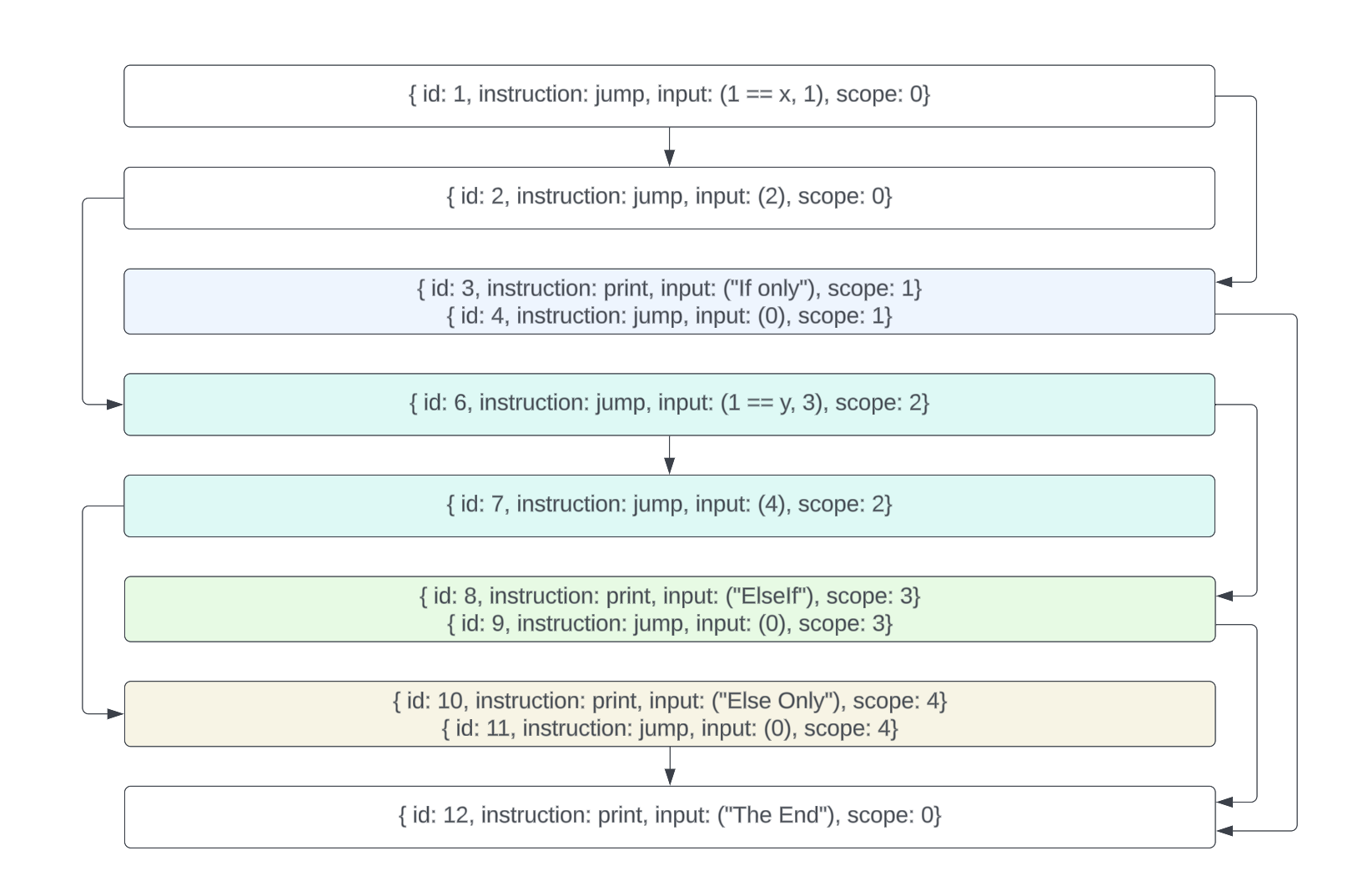

Combining jumps back to 0, we get:

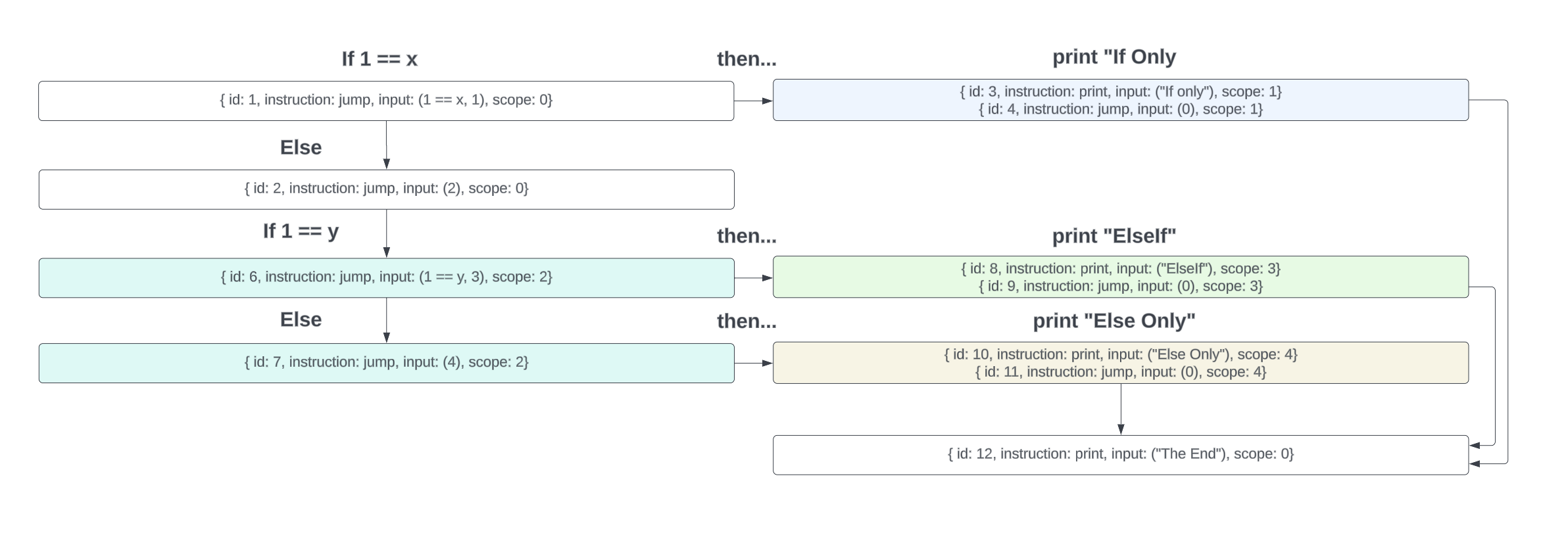

Which can be reorganized with pseudocode annotations:

So the question becomes: how do we perform this (1) condensation, (2) compaction, and then (3) Connection programmatically?

To do so, we’ll construct a directed, acyclic graph. Each vertex in this graph has at most two edges:

- detour: the highest precedence edge to flow through

- continue: the lowest precedence edge

We’ll name this structure a left child preferential binary tree (where the left child is the detour).

This graph will be constructed in three steps:

- Condensation: in this step, we seek to condense the conditional evaluation logic such that we can replace the programmatically generated input variable within the conditional jump instruction. For more elaborate/complex evaluations, this may be a bit of challenge.

- Compaction: combine same scope, sequential instructions into segments. Each of these segments represents a linear execution flow and will be a node in the flow graph.

- Connection: if we operate under the assumption that related conditionals are contiguous (which holds true for the match statement implementation in the

soroban_rust_frontendcompiler frontend), we can iterate through the instructions, linking nodes based on their entry points.

Continuing with our example, we’d get a graph defined as so (where each number is an instruction identifier).

Self: 1

Detour: 3

Continue: 2

Self: 2

Detour: 6

Continue: X

Self: 3

Detour: X

Continue: X

Self: 6

Detour: 8

Continue: 7

Self: 7

Detour: 10

Continue: X

Self: 8

Detour: X

Continue: X

Self: 10

Detour: X

Continue: X

Self: 12

Detour: X

Continue: X

With this, we can generate rust code by traversing our graph based on the precedence defined. Here, (1) is our root, so we will start there. We will explain each step with our current generated code following along below each explanation.

First, start with 1. We generate self and then detour to 3.

// 1

if 1 == x {

At 3 we generate self (indented since we are an inner scope) then we halt since we’re jumping back to 0.

if 1 == x {

// 3

log!("If Only");

// 4

}

Since our detour finished, at 1 we then continue on to 2. At 2 we generate self, then detour to 6.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

At 6 we generate self, then detour to 8.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

// 6

if 1 == y {

At 8 we generate self, then we halt since we’re jumping back to 0.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

// 6

if 1 == y {

// 8

log!("ElseIf");

// 9

}

Returning from 8, back at 6, we continue to 7. We generate self, then detour to 10.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

// 6

if 1 == y {

// 8

log!("ElseIf");

// 9

}

// 7

else {

At 10, we generate self, and then halt since we’re jumping to 0.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

// 6

if 1 == y {

// 8

log!("ElseIf");

// 9

}

// 7

else {

// 10

log!("Else Only");

// 11

}

// TECHNICALLY IN REAL DTR WE'D have another jump here... typo in the example.

}

All the way back at 1, we sequentially iterate (due to the nature of execution of DTR instruction sets) at scope 0 until we make it to instruction 12. Finally at 12, we mark 12 as visited and generate 12. As this instruction halts, we sequentially iterate, realizing we’re at the end of execution.

if 1 == x {

// 3

log!("If Only");

// 4

}

// 2

else {

// 6

if 1 == y {

// 8

log!("ElseIf");

// 9

}

// 7

else {

// 10

log!("Else Only");

// 11

}

}

log!("The End");

And it’s a success! We’ve designed an algorithm to construct a left child preferential binary tree and traversed it to generate the correct Rust code from DTR. We can leverage this strategy to similarly solve the following branching constructs:

- if-else

- nested if-else

- match statements